Entropy

In video compression, entropy is the average amount of information represented by a symbol in a message. It is a function of the model used to produce that message, and it can be reduced by increasing the complexity of the model so that it better reflects the actual distribution of source symbols in the original message. Entropy measures the information contained in a message, and it is the lower bound on the average number of bits per symbol that lossless compression can achieve.
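The dependence of entropy on the model can be illustrated with a small sketch. It is not taken from the original entry: the function names `order0_entropy` and `order1_entropy` and the sample message are hypothetical, chosen only for illustration. The first function estimates bits per symbol from symbol frequencies alone; the second uses a slightly more complex model that predicts each symbol from the one before it. Both are empirical estimates that ignore the cost of describing the model itself.

```python
import math
from collections import Counter


def order0_entropy(message: str) -> float:
    """Average bits per symbol under a zero-order model (symbol frequencies only)."""
    counts = Counter(message)
    total = len(message)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def order1_entropy(message: str) -> float:
    """Average bits per symbol when each symbol is predicted from the previous one."""
    pair_counts = Counter(zip(message, message[1:]))  # (previous, current) pairs
    context_counts = Counter(message[:-1])            # how often each context occurs
    total_pairs = len(message) - 1
    bits = 0.0
    for (prev, _cur), c in pair_counts.items():
        p_cur_given_prev = c / context_counts[prev]
        bits -= c * math.log2(p_cur_given_prev)
    return bits / total_pairs


# Hypothetical sample message: strictly alternating symbols.
message = "ab" * 8
h0 = order0_entropy(message)
h1 = order1_entropy(message)
print(f"zero-order model:  {h0:.3f} bits/symbol")
print(f"first-order model: {h1:.3f} bits/symbol")
```

For this alternating message the zero-order model sees two equally likely symbols, while the first-order model can predict every symbol from its predecessor, so its estimate is lower. Real video sources are far less regular, so the gain from a richer model would typically be smaller.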

- Part of Speech: noun

- Industry/Domain: Entertainment

- Category: Video

- Company: Tektronix

Featured Terms

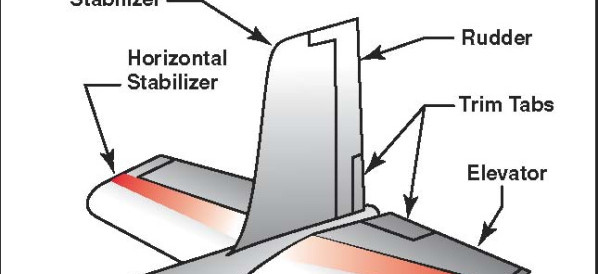

Industry/Domain: Aviation Category: General aviation

Elevator

A movable horizontal airfoil, usually attached to the horizontal stabiliser on the tail, that is used to control pitch. It usually changes the ...

Contributor
